Introduction

This week, the time has come to bring up additional VMs and set up some proper networking between them. This lecture on networking will be delivered by Ondra Hruby, who also prepared the before-class reading.

Before-class reading

Network architecture and abstraction layers

We don’t want you to get bored with paragraphs of theory about the ISO/OSI model, etc., but the concept of layers is so fundamental that we have to at least mention it. We will try to offer you a rather practical view of layers and explain how this theory maps to real software and to the bytes “running on the wires”.

You’ve probably heard that the network stack of a machine or an intermediate device (such as a switch or router) is divided into so-called layers, and that each layer is responsible for handling a different aspect of network communication. That’s true, but it is a very theoretical definition; one can hardly imagine what it means in reality.

Let’s look at this from the perspective of a software engineer (or, more precisely, a kernel engineer) who writes the network stack. To transfer a message from one machine to another, several software and hardware components must cooperate. First, there is a network card, a piece of hardware into which you usually plug the network cable. The chips of the card have baked-in or loaded firmware, a piece of software that controls the hardware. Then there is a network card driver, another piece of software, which is loaded in the kernel and talks to the network card firmware via some kind of hardware bus (e.g. PCI or USB). Then there are several parts of the kernel itself, responsible for maintaining the available network interfaces, routing traffic through these interfaces, etc. Another part of the kernel is responsible for, e.g., reliable delivery of messages, retransmitting messages that get lost in transit, and providing an API for applications that want to communicate over the network. Finally, there are the applications and the libraries they use.

What we’ve described is very close to the definition of the distinct layers of a network architecture. Each layer can be viewed as a separate software component with a well-defined responsibility, but the exact interface between the layers is not defined, and neither is the exact message format.

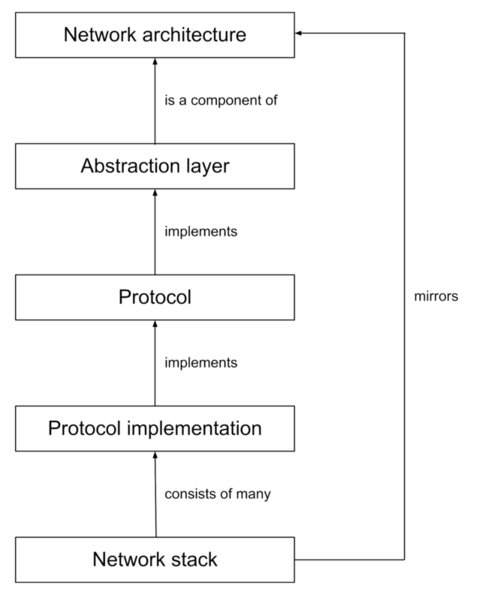

We have used the terms network stack, layer, and network architecture, and we need one more term: protocol. Let’s try to define these terms more formally and explain the relationships between them.

Network architecture

Network architecture is a very high-level and abstract umbrella term. In a broader sense, it can include all facts about a concrete network, including its topology, the devices used, protocols, protocol implementations, etc. In a narrower sense, if a network uses a particular network architecture, the architecture dictates how many abstraction layers are used by the devices of that network when exchanging data. Not all devices have to implement all layers. Usually, end devices implement all of them, whereas intermediate devices implement only some of them (this is also given by the architecture). A network architecture can mandate the use of particular protocols on particular layers. For example, it is characteristic of the TCP/IP architecture that it uses the IP protocol on its network layer. We can also say that TCP/IP is the network architecture of the Internet. In the following paragraphs, we will talk specifically about the TCP/IP architecture unless stated otherwise.

Abstraction layers

An abstraction layer is a component of a network architecture. It is an abstract term, but each layer has its real equivalent in:

- A software component of a device that operates on that layer.

- A header / footer (or a payload for L7) of the message transferred over the wire.

The TCP/IP architecture defines four abstraction layers:

| Layer | Layer name | Handled by | Example protocols |
|---|---|---|---|
| L7 | Application layer | Applications and libraries | HTTP, SMTP, IMAP, DNS, DHCP, SSH, LDAP, RIP, BGP |
| L4 | Transport layer | Kernel | TCP, UDP, ICMP, OSPF, IPSec protocols (AH and ESP) |
| L3 | Internet (network) layer | Kernel | IPv4, IPv6, ARP |
| L2 | Data-link (link) layer | Driver code (run by OS) and network card firmware | Ethernet, 802.11 (Wi‑Fi), STP, VLAN, PPP |

Each layer can be viewed as a placeholder for a concrete protocol that operates on that layer. In this sense, a protocol implements (or is implemented on) a layer. It also means that a particular protocol operates on just one layer and no other; e.g. we can say that TCP is an L4 (transport) protocol.

We can employ distinct protocols on the layers: protocols are like plugins for the layers, and we can choose from a variety of them for different purposes. This is the power of the layered model. For example, we can use TCP if we need reliable communication, or UDP if we care less about reliability, e.g. in favor of smooth, low-latency delivery. Or we can go one hop over a cable and the next hop over a wireless link, again with a different protocol used on each hop.

Not all devices employ protocols on all of the layers. Some devices, namely switches and bridges (let’s consider them synonyms for now), operate only on the Link layer, whereas routers operate up to the Internet layer. We say “up to” because a device always has to implement all the lower layers as well; otherwise, we wouldn’t be able to interconnect them (the wire signal is handled by the Link layer). To summarize:

- Switches / bridges implement only the Link layer.

- Routers implement Link layer and Network (Internet) layer.

- End devices (both sending and receiving hosts) implement all layers.

These are schematic symbols of the devices we will use:

You may have noticed that the numbering of the layers in the TCP/IP architecture is quite illogical. The reasons are purely historical. In 1984, the so-called ISO/OSI (Open Systems Interconnection) reference model was designed. ISO/OSI can be viewed as another example of a network architecture, one with 7 abstraction layers, labeled L1 to L7. The TCP/IP architecture picked only 4 of them, but the numbering remained the same. ISO/OSI is a very good example of overengineering: the architecture is no longer used because of its complexity and ponderousness. However, some fragments of the ISO/OSI protocols persisted and influenced protocols that are still in use today. For example, LDAP is an application protocol used for accessing a server database of user accounts (called a directory). Among other things, the protocol is used to authenticate the users stored in the directory. It defines common fields that all user accounts in the directory are supposed to have. The list of fields is very extensive and includes such fields as a telex number or an X.121 address (an addressing scheme used by ISO/OSI). Fun fact: LDAP stands for “Lightweight Directory Access Protocol” and was meant as a replacement for the older DAP, which was part of the ISO/OSI protocol suite.

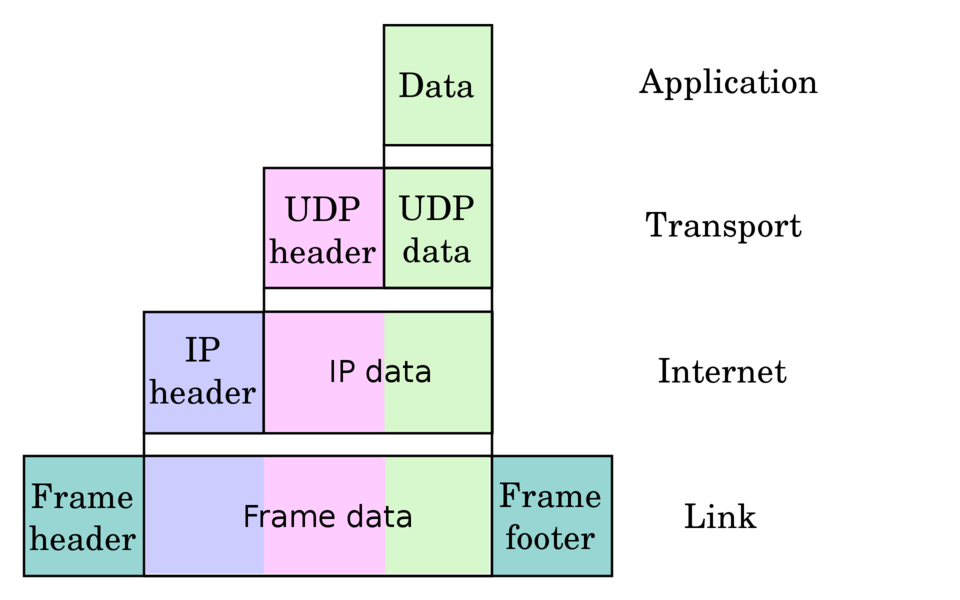

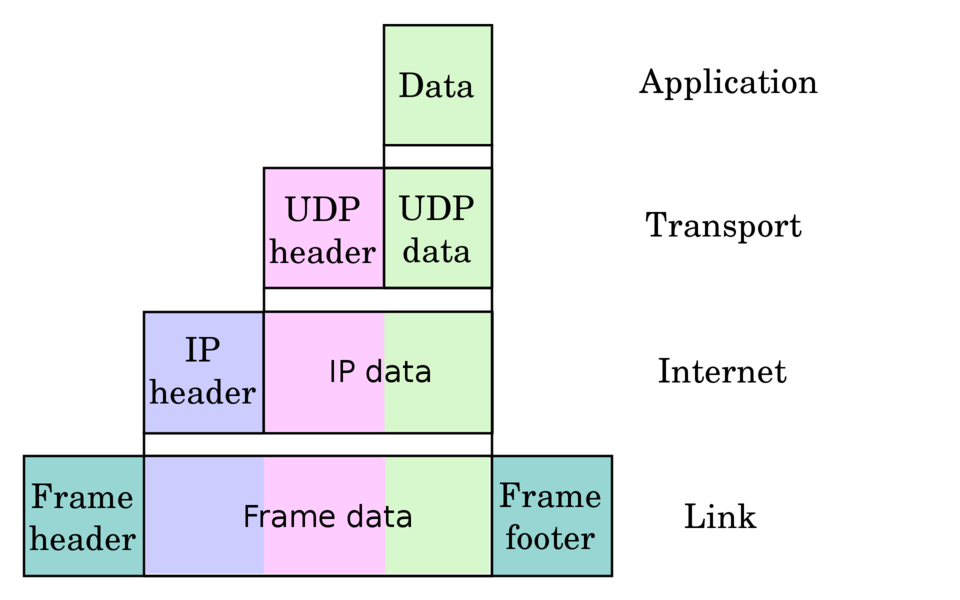

What does it mean that “the protocol operates” on a layer? The key term is encapsulation; without it, it would not make much sense to talk about layers at all. The end entities exchanging data are applications running on machines (hosts). So if we want to exchange data with another application running on a (potentially) different host, we need to start from the perspective of the originating application: “What does an application have to do in order to send data through the network?” Let’s say we are writing an application and want to send an HTTP request. We will use an HTTP library in our application and call some function of the library. But what will the library do? It will compose an HTTP message (the request), since it speaks HTTP; it is an HTTP library. And then? It will call a function of the operating system API to open a TCP socket, and then another function to send the HTTP request through the socket (a write syscall on the socket). What happens next? The TCP stack of the kernel handles the write call and prepends a TCP header to the data it received (the HTTP request). The kernel knows what to fill in to the header, because it keeps the state of the connection in its data structures, and the connection is identified by the file descriptor passed to the write call. So it is up to the kernel to fill in the TCP header: it partly uses parameters passed when the socket was set up (e.g. the port number of the remote host) and partly uses values maintained at runtime (e.g. the TCP sequence number). The same repeats one layer below: the kernel prepends an IP header to the TCP segment. And once more on the network card driver level: it prepends an Ethernet header to the IP packet and appends an Ethernet footer after it (the main purpose of the footer is usually to carry a CRC). Finally, the resulting Ethernet frame is sent over the wire in some kind of binary encoding specified by the Ethernet protocol.

The stacking of headers on top of one another is called encapsulation. The data encapsulated on a particular layer is called the payload. For example, from the perspective of the IP protocol, the TCP segment (the HTTP request with the TCP header prepended) is the payload, which is then prepended with an IP header on L3. The layers are isolated in the sense that a layer does not touch (does not change) the payload it was given by the layer above. It only prepends (or appends) its own header (or footer) to the payload.
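To make encapsulation concrete, here is a minimal Python sketch that wraps an application payload in a UDP header, then an IPv4 header, then an Ethernet header, using only the standard `struct` module. It is an illustration under our own assumptions, not a packet you should put on a real wire: the function names, addresses, ports, and MAC addresses are made up, the IPv4 header checksum is left as zero, and the Ethernet footer (FCS) is omitted because network cards usually compute it themselves.

```python
import struct

# Hypothetical helpers: each one prepends exactly one header to the payload.

def udp_encapsulate(payload: bytes, src_port: int, dst_port: int) -> bytes:
    # UDP header: source port, destination port, length (header + data), checksum.
    # A checksum of 0 means "no checksum", which UDP over IPv4 allows.
    return struct.pack("!HHHH", src_port, dst_port, 8 + len(payload), 0) + payload

def ipv4_encapsulate(payload: bytes, src: bytes, dst: bytes, proto: int) -> bytes:
    # Minimal 20-byte IPv4 header without options. The header checksum is
    # left at 0 for brevity; a real stack must compute it.
    version_ihl = (4 << 4) | 5            # version 4, header length 5 * 4 bytes
    header = struct.pack("!BBHHHBBH4s4s",
                         version_ihl, 0, 20 + len(payload),  # ver/IHL, TOS, total length
                         0, 0,                 # identification, flags + fragment offset
                         64, proto, 0,         # TTL, upper-layer protocol, checksum
                         src, dst)             # source and destination IP address
    return header + payload

def ethernet_encapsulate(payload: bytes, dst_mac: bytes, src_mac: bytes,
                         ethertype: int) -> bytes:
    # 14-byte Ethernet header; the 4-byte FCS footer is omitted in this sketch.
    return struct.pack("!6s6sH", dst_mac, src_mac, ethertype) + payload

# Each layer prepends its own header and never modifies the payload it got.
data = b"hello"
segment = udp_encapsulate(data, 40000, 53)
packet = ipv4_encapsulate(segment, bytes([10, 0, 0, 56]), bytes([10, 0, 0, 1]),
                          proto=17)                          # 17 = UDP
frame = ethernet_encapsulate(packet, bytes.fromhex("0242d6c92628"),
                             bytes.fromhex("0242d6c92627"), 0x0800)  # 0x0800 = IPv4
print(len(data), len(segment), len(packet), len(frame))     # 5 13 33 47
```

Reading `frame` from left to right gives the Ethernet header, the IPv4 header, the UDP header, and finally the untouched application data; slicing off 14, then 20, then 8 bytes walks back down the same nesting.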

Below is an illustration of encapsulated data. To map it to our previous example, imagine the HTTP request as “Data”, TCP instead of “UDP”, and Ethernet as “Frame”. What is on the “Link” layer is eventually sent over the wire.

We have described how the message is assembled when it is sent from a host. We should also mention what happens to the message in transit.

As you would expect, when the frame is received by an intermediate device, it first processes the frame on the link layer, i.e. it reads the frame header and footer, and decides what to do with the payload, based on the parameters read from the header / footer. If the payload is passed to the upper layer, this repeats once again with the payload.

In general, when a message is passed to a layer, one of the following actions is performed:

1. The payload is processed on the current layer and passed to the upper layer. Note that only the payload is passed to the next layer; the header / footer is consumed by the current layer (this is called decapsulation). If we are already on the Application (topmost) layer, the message is delivered and no further processing is performed.

2. The message is processed on the current layer and the processing ends here. The message is then passed down to the lower layers and encapsulated again. If we are already on the Link (lowest) layer, the frame is sent over the wire after L2 processing.

   - A. The processing on the current layer might be destructive: in this case, the original header / footer may be replaced by a new one (e.g. on a transition from one L2 protocol to another L2 protocol).

   - B. Or the header / footer of the current layer may be kept intact.

3. The message is dropped (either by firewall rules, or because the protocol requires it, e.g. a received Ethernet frame whose destination MAC address differs from ours).

Which action is performed depends on several factors:

- The parameters filled in the header / footer.

- The concrete protocol which is placed on that layer.

- The capabilities or type of the device.

- The configuration of the device.

- Etc.
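The link-layer part of this decision can be sketched in Python. This is a simplified, hypothetical receive path (the name `handle_frame` and the returned tuples are our own): it parses the 14-byte Ethernet header, drops frames addressed to another host as the protocol requires, and otherwise decapsulates the frame and hands the payload up according to the EtherType. Multicast, VLAN tags, and promiscuous mode are deliberately ignored.

```python
import struct

BROADCAST = bytes.fromhex("ffffffffffff")
ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_ARP = 0x0806

def handle_frame(frame: bytes, our_mac: bytes):
    """Hypothetical L2 receive path: returns (action, upper_protocol, payload)."""
    if len(frame) < 14:
        return ("drop", None, b"")          # too short to be an Ethernet frame
    dst, src, ethertype = struct.unpack("!6s6sH", frame[:14])
    if dst != our_mac and dst != BROADCAST:
        return ("drop", None, b"")          # not for us: the protocol says drop it
    payload = frame[14:]                    # decapsulation: the header is consumed here
    if ethertype == ETHERTYPE_IPV4:
        return ("pass_up", "IPv4", payload)
    if ethertype == ETHERTYPE_ARP:
        return ("pass_up", "ARP", payload)
    return ("drop", None, b"")              # no upper-layer handler for this EtherType
```

A switch would make a different choice at the same point (forward the frame intact instead of consuming it), which is exactly why the device type and its configuration appear among the factors above.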

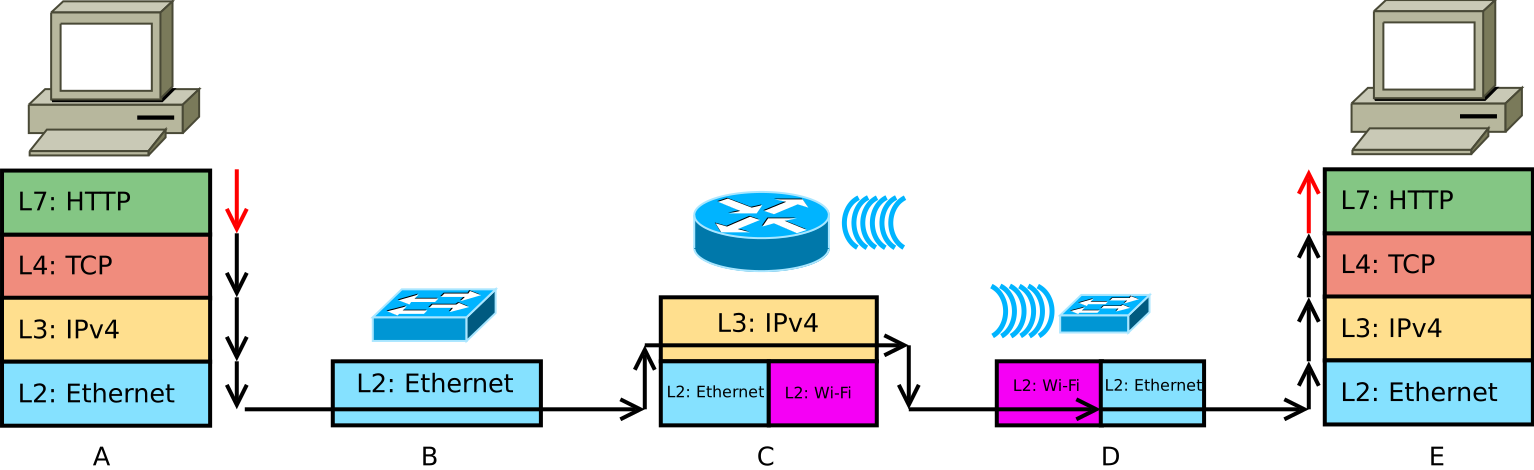

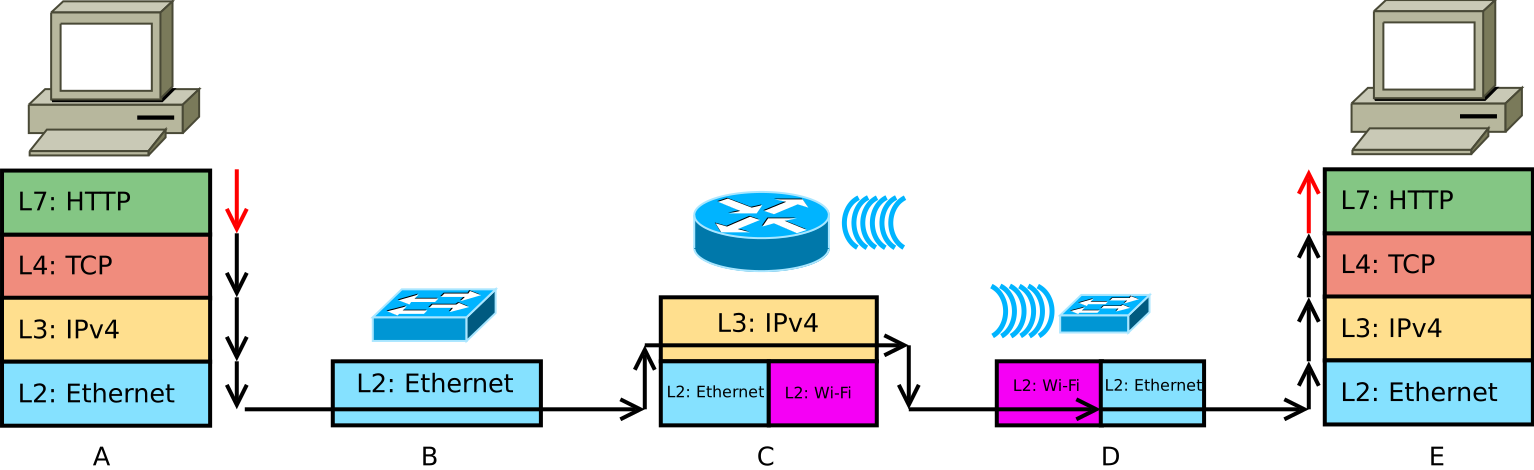

Here is an example of a message flowing through a network and its distinct layers:

- On host A, the message passes through all layers one by one, until a frame similar to the one shown in the previous figure is produced and sent over the wire.

- On Ethernet switch device B, the Ethernet frame is handled according to rule 2.B: it is bridged between the switch ports based on the Ethernet header and kept intact.

- On router device C, the Ethernet frame is processed on L2, the Ethernet header and footer are stripped, and the payload is handed over to L3, according to rule 1. On L3, the packet is routed through the router according to the IP protocol. Its processing ends here, so the packet is passed down to the lower layer, this time occupied by a different protocol (802.11, better known as Wi-Fi), according to rule 2.B.

- On switch / wireless bridge device D, the frame is processed according to rule 2.A: the Wi-Fi header / footer is processed on L2 and then stripped. Some parameters of the original Wi-Fi frame are reused by the bridge to assemble a new Ethernet frame; the payload is placed into this frame, which is sent over the wire. In this case, the processing ends on L2, but it is “destructive”.

- On the receiving host, the processing is the same as on host A, only in reverse order: the payload is decapsulated up to the application layer, where the message is delivered.

Communication protocol

A communication protocol can be described as a set of rules that two sides need to obey if they want to communicate with each other. The rules govern several aspects of the communication; most importantly, every protocol defines its own data format for the messages exchanged between the two sides. ABNF or a similar formal grammar is usually used to describe the protocol elements (parts of the protocol messages). The protocol also defines the algorithms used by the two sides: often a finite state machine (FSM) is used to describe the state of a communication peer in the context of a protocol session. Receiving or sending a message advances the peer from its current state to the next state, according to the FSM. A protocol might mandate a particular protocol on a lower layer, e.g. HTTP/1.1 runs over TCP on the transport layer, and TCP port 80 is reserved for HTTP. Many other aspects of the communication can be defined by the protocol, such as error detection mechanisms, routing of the messages, etc.
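As an illustration of the FSM idea, here is a Python sketch of a small subset of the TCP client-side state machine: connection setup and an active close only. The state names follow the TCP specification, but the event names and the `TcpClientFsm` class are our own simplified labels, and most states and transitions (passive open, simultaneous close, resets, retransmission timers) are left out.

```python
# (state, event) -> (next_state, action to perform). Simplified TCP subset;
# event names are hypothetical labels, not identifiers from the specification.
TRANSITIONS = {
    ("CLOSED", "app_open"):        ("SYN-SENT", "send SYN"),
    ("SYN-SENT", "recv_syn_ack"):  ("ESTABLISHED", "send ACK"),
    ("ESTABLISHED", "app_close"):  ("FIN-WAIT-1", "send FIN"),
    ("FIN-WAIT-1", "recv_ack"):    ("FIN-WAIT-2", None),
    ("FIN-WAIT-2", "recv_fin"):    ("TIME-WAIT", "send ACK"),
    ("TIME-WAIT", "timeout_2msl"): ("CLOSED", None),
}

class TcpClientFsm:
    def __init__(self):
        self.state = "CLOSED"

    def handle(self, event: str):
        """Advance the state for an event; return the action to perform (or None)."""
        key = (self.state, event)
        if key not in TRANSITIONS:
            raise ValueError(f"event {event!r} not allowed in state {self.state}")
        self.state, action = TRANSITIONS[key]
        return action

fsm = TcpClientFsm()
for event in ("app_open", "recv_syn_ack", "app_close",
              "recv_ack", "recv_fin", "timeout_2msl"):
    action = fsm.handle(event)
    print(f"{event:13} -> {fsm.state:12} {action or ''}")
```

Keeping the transitions in a table rather than in nested `if` statements makes the FSM easy to compare with a protocol specification: every row is one arrow of the state diagram.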

Internet protocols are usually published in so-called RFCs (Requests for Comments). These are documents published mostly by the IETF (Internet Engineering Task Force) on the RFC Editor website (the authoritative source) or directly on the IETF website. Traditionally, every RFC was submitted as plain ASCII text and published in that form (which is why some people consider RFCs ugly); newer RFCs are authored in XML and also published as HTML and PDF, but the plain-text version is still available.

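Every RFC has a unique number identifying it. A published RFC is never changed; a revised version is published as a new RFC with a new number, which obsoletes (or updates) the old one. E.g. RFC 2616 defines the HTTP protocol (version 1.1); it was later obsoleted by RFCs 7230 to 7235.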

As we already said, a protocol is assigned to an abstraction layer and operates only on that layer. Some publications make the assignment based solely on the purpose of the protocol. E.g. we can read that RIP is an L3 protocol, because it is a routing protocol, and routing occurs on L3. That is one possible point of view, but we will stick to a stricter view that takes encapsulation into account. Because RIP uses UDP as its transport protocol, it is an L7 protocol beyond all doubt. Unless you use it in a more advanced setup (such as RIP over some kind of VPN, or tunneling in general), it will always have some L2, L3, and L4 protocols below it; for example, the encapsulation sequence could be Ethernet, IP, UDP, and RIP.

Protocol implementation

This term is quite self-explanatory. A protocol can also be viewed as a “manual” for software engineers who eventually want to implement it in software. So a protocol implementation is a concrete software component of the network card driver, the operating system, or an application library, depending on which layer the protocol operates on. Note that whereas a protocol is just an abstract definition of rules written on paper, a protocol implementation is written in a concrete programming language for a concrete platform. For example, an Ethernet driver (usually written in C) for the same network card will be different for Linux and for Windows, because the two operating systems have different APIs (and ABIs) for drivers. Likewise, the implementations of the IP or TCP protocols are completely different in Linux and, e.g., in Windows, as they are part of each kernel.

The situation is a little easier for application protocols built on top of TCP and UDP. The reason is the Berkeley sockets API (also called the BSD sockets API), which is exposed by all modern operating systems to allow applications to communicate over the network. The API was first introduced in 1983 and has since become the de facto standard. It defines several functions, and their semantics, to create and manipulate so-called sockets, each of which represents one end of a network connection with a remote host. Whether the socket represents a TCP connection or a UDP peer is reflected in the parameters of the socket. On Linux, these API functions are part of the C standard library, and most of them are just wrappers around system calls, which are the interface to the Linux kernel. If all operating systems implemented the same API, the code would be portable, at least in theory. In practice, there are differences between the operating systems. There is a short article summarizing the differences in the socket APIs between Linux and Windows.
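A minimal sketch of the Berkeley sockets calls from the application’s point of view, in Python, whose `socket` module is a thin wrapper around the same API. To keep it self-contained, both ends run in one process on the loopback address 127.0.0.1, and the server is a throwaway echo thread written only for this demo; the request bytes merely look like HTTP (the host name `example` is a placeholder). Switching to UDP would mainly change the socket type parameter (`SOCK_DGRAM` instead of `SOCK_STREAM`).

```python
import socket
import threading

REQUEST = b"GET / HTTP/1.1\r\nHost: example\r\n\r\n"   # placeholder payload

def echo_once(server: socket.socket) -> None:
    # Server side: accept() one connection, read until the client stops
    # sending, then send the same bytes back.
    conn, _addr = server.accept()
    with conn:
        chunks = []
        while chunk := conn.recv(1024):
            chunks.append(chunk)
        conn.sendall(b"".join(chunks))

# socket() -> bind() -> listen(); port 0 lets the kernel pick a free port.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(1)
port = server.getsockname()[1]
worker = threading.Thread(target=echo_once, args=(server,))
worker.start()

# Client side: socket() -> connect() -> send. The kernel builds the TCP/IP
# headers; the application only supplies the payload bytes.
with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as client:
    client.connect(("127.0.0.1", port))
    client.sendall(REQUEST)
    client.shutdown(socket.SHUT_WR)       # tell the server we are done sending
    reply = b""
    while chunk := client.recv(1024):
        reply += chunk

worker.join()
server.close()
print(reply == REQUEST)                   # True
```

The loopback address and port 0 are choices made for the demo; a real client would connect to the server’s address and a well-known port such as 80.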

Network stack

Network stack is another umbrella term, this time on the other side of the barrier: the concrete (implementation) side rather than the abstract one. It could be defined as a collection of protocol implementations for a particular platform, taking a concrete network architecture into account. E.g. we can refer to the TCP/IP stack of Linux, or of some embedded system. In a broader sense, it can be defined as all the networking-related software available on a particular platform.

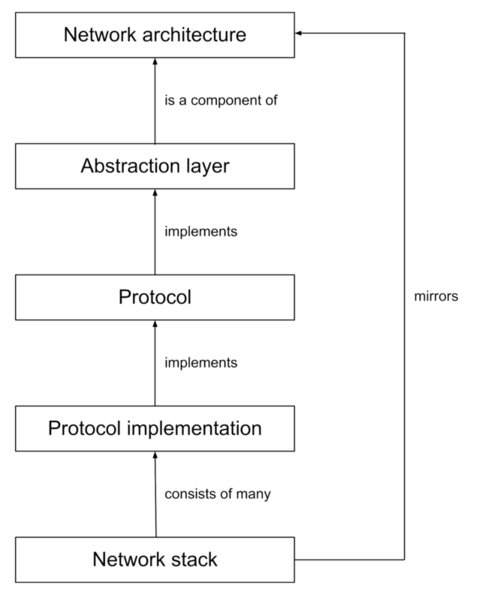

Here is a mind map of all the terms we have mentioned so far:

IP addresses and subnets

In this section, we will recap the IP address format and the basics of IP subnetting.

The Internet Protocol (IP) version 4 (IPv4), which is used on L3 of the TCP/IP architecture, uses IP addresses to identify hosts within the network. End hosts usually have at least one IP address assigned, whereas routers (intermediate nodes) usually have at least two. Each IP address should be assigned only once in the network, i.e. to a single host.

An IPv4 address is a sequence of 4 bytes. We write IP addresses in decimal, with the bytes separated by dots, e.g. 10.7.130.63. Each byte can (by definition) have a value between 0 and 255 (inclusive). There are two special addresses: 0.0.0.0 and 255.255.255.255. The former means “unknown IP address” or “any IP address” (it can be used, for example, when binding a listening socket, meaning “bind to all IP addresses assigned to the machine”), and the latter is the “limited broadcast IP address”, meaning “send the packet to all hosts on the local network”; routers do not forward such packets.

Since 1993, the so-called network mask has been an explicit part of an IP address. A network (or subnet) mask is a 32-bit binary sequence consisting of a contiguous prefix of ones followed by zeros, e.g. (in binary) 11111111.11111111.11110000.00000000; by convention, we put a dot after every byte, as in an IP address. A more frequent notation is dotted decimal: 255.255.240.0 (we have just converted the above bytes from binary to decimal). Probably the most frequent notation is the CIDR notation, which is simply the IP address followed by a slash and a decimal number giving the count of ones in the mask (the length of the prefix), e.g. 10.7.130.63/20.
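The conversions between these notations are plain integer arithmetic. A small Python sketch (the function names are our own) that turns a dotted-decimal address into a 32-bit integer and back, and expands a CIDR prefix length into a mask in both dotted-decimal and dotted-binary form:

```python
# Hypothetical helper names; an IPv4 address is treated as one 32-bit integer.

def ip_to_int(dotted: str) -> int:
    # "10.7.130.63" -> four bytes -> one 32-bit number, most significant byte first
    b = [int(part) for part in dotted.split(".")]
    if len(b) != 4 or any(not 0 <= x <= 255 for x in b):
        raise ValueError(f"not an IPv4 address: {dotted}")
    return (b[0] << 24) | (b[1] << 16) | (b[2] << 8) | b[3]

def int_to_ip(value: int) -> str:
    return ".".join(str((value >> shift) & 0xFF) for shift in (24, 16, 8, 0))

def prefix_to_mask(prefix_len: int) -> int:
    # prefix_len ones followed by (32 - prefix_len) zeros
    if not 0 <= prefix_len <= 32:
        raise ValueError("prefix length must be between 0 and 32")
    return (0xFFFFFFFF << (32 - prefix_len)) & 0xFFFFFFFF

def mask_to_binary(mask: int) -> str:
    bits = format(mask, "032b")
    return ".".join(bits[i:i + 8] for i in range(0, 32, 8))

print(ip_to_int("10.7.130.63"))                # 168264255
print(int_to_ip(prefix_to_mask(20)))           # 255.255.240.0
print(mask_to_binary(prefix_to_mask(20)))      # 11111111.11111111.11110000.00000000
```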

The subnet mask divides the IP address into two parts: the subnet ID and the host ID. The subnet ID identifies the subnet (a set of devices interconnected on L2), and the host ID identifies a particular device in that subnet. If we apply binary AND to an IP address and its mask, we obtain the subnet ID. All hosts in the same subnet have the same subnet ID. For example, the two distinct hosts 10.7.130.63/20 and 10.7.141.63/20 belong to the same subnet, but 10.7.145.63/20 belongs to a different one. The subnet ID of the first two is 10.7.128.0, whereas the subnet ID of the third one is 10.7.144.0. Only the third byte is interesting here, because the mask has 11110000 there: 130 = 10000010 and 141 = 10001101 both give 10000000 = 128, whereas 145 = 10010001 gives 10010000 = 144.

The first address in a particular subnet is reserved and is the subnet ID (see above). The last address in a particular subnet is reserved for the subnet broadcast; for example, 10.7.143.255 is the broadcast address of subnet 10.7.128.0/20. A packet with the subnet broadcast address as its destination IP address is intended for all hosts in the given subnet. All IP addresses between the subnet ID and the subnet broadcast address can be assigned to the hosts of that subnet.
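By hand, the subnet ID is one AND (keep the subnet bits) and the broadcast address is one OR (set all host bits to 1). A self-contained Python sketch with our own helper names, reproducing the /20 examples above; it assumes a prefix length of at most 30, so that the subnet has at least two usable host addresses.

```python
# Hypothetical helpers; assumes a prefix length between 0 and 30.
def ip_to_int(dotted: str) -> int:
    a, b, c, d = (int(x) for x in dotted.split("."))
    return (a << 24) | (b << 16) | (c << 8) | d

def int_to_ip(value: int) -> str:
    return ".".join(str((value >> s) & 0xFF) for s in (24, 16, 8, 0))

def subnet_info(cidr: str) -> tuple:
    """Return (subnet ID, broadcast, first host, last host) as dotted strings."""
    addr_str, prefix_str = cidr.split("/")
    addr = ip_to_int(addr_str)
    mask = (0xFFFFFFFF << (32 - int(prefix_str))) & 0xFFFFFFFF
    subnet_id = addr & mask                        # AND keeps only the subnet bits
    broadcast = subnet_id | (~mask & 0xFFFFFFFF)   # OR sets all host bits to 1
    return tuple(int_to_ip(x) for x in (subnet_id, broadcast,
                                        subnet_id + 1, broadcast - 1))

for host in ("10.7.130.63/20", "10.7.141.63/20", "10.7.145.63/20"):
    print(host, subnet_info(host))
```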

IP addresses are quite easy to read when the subnet mask ends on a byte boundary. In other cases, it depends on how quickly you can convert binary numbers, or think in binary in general. If you are not sure, use a subnet calculator.

Let’s go through an example. The IP address 10.7.100.5/29 is part of a subnet that has:

- subnet ID: 10.7.100.0/29

- broadcast address: 10.7.100.7/29

- usable addresses for hosts in that subnet: 10.7.100.1/29 to 10.7.100.6/29

The next adjacent subnet is:

- subnet ID: 10.7.100.8/29

- broadcast address: 10.7.100.15/29

- usable addresses for hosts in that subnet: 10.7.100.9/29 to 10.7.100.14/29

And so on.
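If you have Python at hand, its standard `ipaddress` module works as a subnet calculator. A short sketch checking the /29 example above and deriving the next adjacent subnet (the variable names are our own):

```python
import ipaddress

iface = ipaddress.ip_interface("10.7.100.5/29")
net = iface.network                       # the subnet this address belongs to
hosts = list(net.hosts())                 # usable addresses only

print(net.network_address)                # 10.7.100.0  (subnet ID)
print(net.broadcast_address)              # 10.7.100.7
print(hosts[0], "-", hosts[-1])           # 10.7.100.1 - 10.7.100.6

# The next adjacent /29 starts right after this subnet's broadcast address.
next_net = ipaddress.ip_network(f"{net.broadcast_address + 1}/{net.prefixlen}")
print(next_net, next_net.broadcast_address)  # 10.7.100.8/29 10.7.100.15
```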

Useful commands

ip command

The `ip` command is the Swiss Army knife of networking. It is used to configure network interfaces, assign IP addresses to them, alter the routing table, etc. The general syntax is:

```
ip <object> <action> <parameters>
```

The object is the kind of object you want to operate on (e.g. `link`, `neighbor`, `address`, `route`). The action can be e.g. `show`, `add`, `change`, or `delete`, but it depends on the object word (not all actions are valid for all kinds of objects).

Object words can be abbreviated, e.g. `ip address` to `ip addr`, or even `ip a`, so you don’t have to type as much.

Each object kind has a default action, which is usually `show`. So typing `ip link` is equivalent to `ip link show`.

Note that changes made using this command do not persist across reboots. To make them persistent, use a daemon such as systemd-networkd and make it start at boot. However, this command is good for testing your setup, or when you need a temporary change of your network configuration.

Useful examples:

- `ip link` – shows the list of available network interfaces.

- `ip link set <ifname> {up,down}` – sets an interface up or down (like an on/off switch for that interface).

- `ip neigh` – lists the contents of the ARP cache table.

- `ip addr` – shows the list of network interfaces with the IP addresses assigned to them.

- `ip addr add <IP address>/<mask> dev <ifname>` – assigns an IP address to an interface, e.g. `ip addr add 10.0.0.1/24 dev eth0`.

- `ip route` – prints the routing table of the host.

- `ip route add 0.0.0.0/0 via <gateway IP address>` – adds a default route to the routing table. `0.0.0.0/0` can be replaced with the `default` keyword. The gateway associated with this route (the so-called default route) is called the default gateway. For example: `ip route add default via 10.0.0.1`.

Diagnostic commands

- `ping <IP address or hostname>` – sends an ICMP echo request to the specified host and waits for an ICMP echo reply. It is useful to test whether the other side is alive and reachable through the network.

- `traceroute <IP address or hostname>` – investigates and prints the network path to the remote host. Its output is the list of routers (their IP addresses) along the network path to the host. It uses the TTL field of the IP header: every router decrements the TTL, and the router that decrements it to zero drops the packet and responds with an ICMP time exceeded message. To investigate the first router, traceroute sends an IP packet with TTL=1, for the second router another IP packet with TTL=2, etc. From each time exceeded response, traceroute detects the IP address of the router that sent it.

- `ss` – lists sockets. It is useful for monitoring services running on the host, e.g. which application listens on which port. See `man ss` for more information.
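The TTL trick behind traceroute can be simulated in a few lines of Python. This is a toy model, not a real traceroute: no packets are sent, and the path is a hypothetical list of router addresses. Each router decrements the TTL, the router that brings it to zero is the one that answers with ICMP time exceeded, and once the TTL is large enough the probe reaches the destination itself (which answers differently, e.g. with an echo reply or port unreachable, depending on the probe type).

```python
def traceroute_sim(path, destination, max_ttl=30):
    """Toy model: path is a hypothetical list of router IPs towards destination."""
    hops = []
    for ttl in range(1, max_ttl + 1):
        remaining = ttl
        responder = None
        for router in path:
            remaining -= 1                 # every router decrements the TTL
            if remaining == 0:
                responder = router         # ...and this one sends ICMP time exceeded
                break
        if responder is None:              # the probe made it past all routers
            hops.append(destination)
            break
        hops.append(responder)
    return hops

# Documentation-range addresses, chosen only for the example.
print(traceroute_sim(["10.0.0.1", "192.0.2.1"], "198.51.100.7"))
```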

Rule of thumb

For two hosts to be able to communicate:

1. Either they must be connected to the same switch(es) and have IP addresses assigned from the same subnet range.

2. Or they can have IP addresses assigned from different subnets, but then they have to be connected through router(s), and the first rule applies to each hop: to a host and its router, and to each pair of neighboring routers.

MAC addresses

- Are 6-byte identifiers assigned to each network interface, e.g. 02:42:d6:c9:26:27.

- They are baked into the network card by the manufacturer. However, some drivers allow you to change your MAC address.

- In one LAN, all devices connected through switches must have a unique MAC address. Otherwise, evil things happen. To avoid collisions, every manufacturer is assigned a prefix (the first 3 bytes) of the MAC address (or multiple prefixes) and is responsible for manufacturing network cards with that prefix and a unique suffix.

Switches vs routers

Switches do not have MAC addresses (smarter switches might have one, but it is only a management MAC address used to access the configuration interface of the switch). This is because switches just receive an Ethernet frame on some port and, based on the destination MAC address in the Ethernet header, forward the frame to another port. The switch maintains a forwarding table (a mapping from MAC addresses to port numbers) and forwards the Ethernet frames based on that table. It does not need a MAC address of its own to do that. The switch fills in the forwarding table by so-called learning and flooding, which happens automatically. That’s why the process is called transparent bridging (or switching), and why a switch does not require any configuration to do basic switching.
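Learning and flooding can be sketched in a few lines of Python. This is a simplified model under our own assumptions (the `LearningSwitch` class is hypothetical, ports are integers, MAC addresses are strings, and entry aging, VLANs, and loop prevention are ignored): the switch learns the source MAC address of every frame, forwards to the known port of the destination MAC address, and floods to all other ports when the destination is unknown or broadcast. Note that it only decides where the frame goes; the frame itself is never modified.

```python
BROADCAST = "ff:ff:ff:ff:ff:ff"

class LearningSwitch:
    def __init__(self, ports):
        self.ports = set(ports)
        self.table = {}                     # forwarding table: MAC address -> port

    def receive(self, in_port, src_mac, dst_mac):
        """Return the set of ports the (unmodified) frame is sent out of."""
        self.table[src_mac] = in_port       # learning: src is reachable via in_port
        out = self.table.get(dst_mac)
        if dst_mac == BROADCAST or out is None:
            return self.ports - {in_port}   # flooding: every port except the ingress one
        if out == in_port:
            return set()                    # destination is on the ingress segment
        return {out}

sw = LearningSwitch([1, 2, 3])
a, b = "02:00:00:00:00:0a", "02:00:00:00:00:0b"
print(sw.receive(1, a, b))   # b unknown yet: flooded to ports 2 and 3
print(sw.receive(2, b, a))   # a was learned on port 1
print(sw.receive(1, a, b))   # b was learned on port 2
```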

Routers have MAC addresses assigned on each interface because they need to be able to receive Ethernet frames on that interface and pass the payload to the upper layer. From the point of view of Ethernet, the traffic ends on the router (is destined to the router). So the Ethernet frame is decoded, the original header is stripped, and the packet is then routed by the IP protocol to another interface of the router. There it is encapsulated in another Ethernet frame, this time with the destination MAC address of the next hop (which can be either the target host or another router on the network path). The processing of the IP packet by the IP protocol is called routing.

This is the link path from one host to another on L2 (from the Ethernet perspective):

And this is the network path from one host to another on L3 (from the IP perspective):

ARP

- Stands for Address Resolution Protocol and is used to translate IP addresses to MAC addresses.

- When you send a message, you need to know the IP address of the remote host in advance. For example, when you run `ping 10.0.0.5`, the kernel needs to know what to fill into the Ethernet frame header, namely which destination MAC address to use. So we need some mechanism to resolve the right MAC address, and that’s the task for ARP.

- What destination MAC address should be put in the frame? It depends, and our rule of thumb will help us answer that. If the target host is on the same IP subnet and connected to the same switch(es) (rule 1), ARP is used to resolve the MAC address of the remote host (in our example 10.0.0.5) directly. If the remote host does not belong to the same IP subnet, a gateway to that subnet is looked up in the routing table, and the MAC address of the gateway is resolved using ARP instead.

- ARP uses Ethernet broadcasts to resolve the target MAC address. It sends ARP requests of the form “Who has 10.0.0.1? Tell 10.0.0.56” to the destination MAC address ff:ff:ff:ff:ff:ff, which means that all hosts connected to the same switch(es) receive the frame. The host with the IP address 10.0.0.1 then sends a response with its MAC address.

- Each host (and router) maintains a so-called ARP cache, a table that maps IP addresses to MAC addresses. If the mapping is already in the cache, no ARP request is sent.

- You can list (or edit) entries of the ARP cache using the `ip neigh` command.
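To see what “Who has 10.0.0.1? Tell 10.0.0.56” looks like on the wire, here is a Python sketch that builds such an ARP request for IPv4 over Ethernet (field layout as defined in RFC 826). The function name is our own and the sender MAC address is just the example address from the MAC section; real network cards additionally pad the frame to the Ethernet minimum size and append the FCS, which the sketch leaves out.

```python
import socket
import struct

def build_arp_request(sender_mac: bytes, sender_ip: str, target_ip: str) -> bytes:
    broadcast = b"\xff" * 6
    eth_header = struct.pack("!6s6sH", broadcast, sender_mac, 0x0806)  # EtherType: ARP
    arp = struct.pack(
        "!HHBBH6s4s6s4s",
        1,                               # hardware type: Ethernet
        0x0800,                          # protocol type: IPv4
        6, 4,                            # hardware / protocol address lengths
        1,                               # operation: 1 = request, 2 = reply
        sender_mac, socket.inet_aton(sender_ip),   # "Tell 10.0.0.56"
        b"\x00" * 6,                     # target MAC unknown: that is the question
        socket.inet_aton(target_ip),     # "Who has 10.0.0.1?"
    )
    return eth_header + arp

frame = build_arp_request(bytes.fromhex("0242d6c92627"), "10.0.0.56", "10.0.0.1")
print(len(frame))   # 42 (14-byte Ethernet header + 28-byte ARP message)
```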

IP routing

Whenever an IP packet is sent from a host (either a station or a router), the host must determine a path to the target network based on the destination IP address of the packet. As we already said, either:

- The packet is sent to the target directly. In this case, the target belongs to the same subnet as the host, and ARP resolves the MAC address of the target directly from the destination IP address of the packet.

- Or the target belongs to a different network, and we need to find a gateway to that network. Then the MAC address of the gateway is resolved using ARP. The process of finding the right gateway is called the routing decision.

The router maintains a routing table in its memory, and the contents of the routing table determine the routing decision. The rows (entries) of the table are called routes. The table can be configured manually by a network administrator (this is called static routing), or it can be updated dynamically by routing protocols such as BGP or OSPF (this is called dynamic routing).

Each route contains:

- Subnet ID

- Subnet mask

- Gateway (IP address or the interface name)

- Metric (integer)

Example of a routing table:

```
$ ip r
default via 10.0.2.1 dev veth2-3
10.0.1.0/24 dev veth2-1 proto kernel scope link src 10.0.1.1
10.0.2.0/24 dev veth2-3 proto kernel scope link src 10.0.2.1
10.0.3.0/24 via 10.0.1.1 dev veth2-1
10.0.4.0/24 via 10.0.2.1 dev eth2-3
```

When an IP packet is sent from the host, the routing decision is made like this (pseudocode):

```
void routingDecision(packet) {
    // routingTable is sorted primarily by prefix length (the most specific
    // prefixes come first), and secondarily (if two entries have the same
    // prefix) by the metric (numeric weight) of the entry, lowest first.
    for entry in routingTable {
        // & denotes binary AND. The parentheses matter: in C-like
        // languages, == binds more tightly than &.
        if ((packet.destinationIP & entry.mask) == entry.subnet) {
            if (entry.gateway == NULL) {
                // (1) In this case, our destination host lies
                // in an IP subnet reachable directly by this
                // router.
                routeTo(entry.interface);
            } else {
                // (2) In this case, we have to go to the
                // destination subnet via another gateway
                // (router).
                routeTo(entry.gateway, entry.interface);
            }
            return;
        }
    }
    replyWith(ICMP_NoRouteToHost);
}
```
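A runnable Python version of the routing decision, using the standard `ipaddress` module. The `Route` record, the table contents, and the returned tuples are hypothetical. Instead of keeping the table sorted, it picks the matching route with the longest prefix and, on a tie, the lowest metric, which selects the same route as scanning a table sorted that way.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

@dataclass
class Route:                                   # hypothetical route record
    subnet: ipaddress.IPv4Network
    gateway: Optional[ipaddress.IPv4Address]   # None for connected routes
    interface: str
    metric: int = 0

def routing_decision(table, destination: str):
    dst = ipaddress.IPv4Address(destination)
    matches = [r for r in table if dst in r.subnet]   # (dst & mask) == subnet
    if not matches:
        return ("icmp", "no route to host")
    best = max(matches, key=lambda r: (r.subnet.prefixlen, -r.metric))
    if best.gateway is None:
        return ("direct", best.interface, dst)        # case (1): ARP for dst itself
    return ("via", best.interface, best.gateway)      # case (2): ARP for the gateway

net, addr = ipaddress.IPv4Network, ipaddress.IPv4Address
table = [
    Route(net("10.0.1.0/24"), None, "eth0"),                  # connected route
    Route(net("10.0.3.0/24"), addr("10.0.1.254"), "eth0"),
    Route(net("0.0.0.0/0"), addr("10.0.1.1"), "eth0", metric=100),  # default route
]
print(routing_decision(table, "10.0.1.7"))   # direct delivery on eth0
print(routing_decision(table, "10.0.3.9"))   # the /24 beats the default route
print(routing_decision(table, "8.8.8.8"))    # only the default route matches
```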

The most general route, which always matches, is 0.0.0.0/0 and is called the default route. The gateway associated with this route is called the default gateway. When we set up a router in a private network, this route often points to the public Internet.

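The Gateway field is the IP address of another router (the next hop), and that router must be directly reachable: the gateway has to lie in one of the subnets our router is directly attached to, not in the destination subnet. Therefore, the following must hold for each route with a gateway: route.gateway & M == S, where S/M is a subnet directly attached to route.interface.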

You might notice that we distinguish two cases in the routing decision, (1) and (2). In the first case, the router can deliver the packet directly to the destination network, because the router is a member of that network. This means that we don’t have to send the packet to any other router, because our router can reach the target destination over L2.

From the perspective of L3, a network interface is no longer a physical adapter (port), but an IP address assigned to some L2 interface (adapter or port). So an L3 interface is uniquely identified by the IP address assigned to some L2 interface. One L2 interface can have multiple IP addresses assigned. Sometimes we refer to an L3 interface by its IP address, sometimes by its name (the name of the corresponding L2 interface).

Assigning an IP address to an L2 interface makes it possible to unwrap L2 frames and pass their payload up to L3, and vice versa. Also, when one assigns an IP address to an L2 interface, the kernel automatically creates an entry in the routing table for the subnet which corresponds to the assigned IP address. This route is called a connected route.

For example, when we add the IP address 10.7.130.63/20 to an L2 interface named eth0, the kernel automatically adds this connected route to the routing table:

10.7.128.0/20 dev eth0 proto kernel
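
The connected route is simply the network part of the assigned address. A minimal Python sketch checking the example above with the standard ipaddress module, next to the same computation done by hand as a binary AND with the netmask; the function names are made up for illustration.

```python
import ipaddress


def connected_subnet(address_with_prefix):
    """Subnet of the connected route for an address such as '10.7.130.63/20'."""
    # ip_interface keeps the prefix length; .network clears the host bits.
    return ipaddress.ip_interface(address_with_prefix).network


def connected_subnet_by_hand(address, prefix_len):
    """The same computation spelled out as a binary AND with the netmask."""
    mask = (0xFFFFFFFF << (32 - prefix_len)) & 0xFFFFFFFF  # /20 -> 255.255.240.0
    network = int(ipaddress.IPv4Address(address)) & mask
    return f"{ipaddress.IPv4Address(network)}/{prefix_len}"


print(connected_subnet("10.7.130.63/20"))           # 10.7.128.0/20
print(connected_subnet_by_hand("10.7.130.63", 20))  # 10.7.128.0/20
```

In the third octet, 130 is 1000 0010 in binary and the mask keeps only the top four bits, which leaves 1000 0000, i.e. 128.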

Connected routes never have a gateway filled in, so when such a route matches a packet, the routing decision always takes code path (1). This is because by assigning an IP address, the router becomes part of the subnet defined by that address, so the subnet becomes directly reachable by the router.

Note the proto kernel field of the route. It says who (which protocol) added the route. proto kernel is reserved for connected routes and other routes added by the kernel. proto dhcp means that the route was obtained from a DHCP server (usually the default route). proto bgp or proto ospf identify routes installed by dynamic routing protocols.
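
To make the fields of a route entry more tangible, here is a minimal Python sketch that splits a line as printed by ip route into its destination, keyword/value pairs and bare flags. It assumes lines from the main table and a hand-picked subset of keywords that take one value; iproute2 prints more options than this covers, so treat it as an illustration rather than a real parser. The sample lines are made up, and looks_connected only encodes the rule of thumb from the text (no gateway, proto kernel).

```python
# Keywords assumed to be followed by exactly one value (a subset of what iproute2 prints).
VALUE_KEYWORDS = {"via", "dev", "proto", "scope", "src", "metric", "table"}


def parse_route(line):
    """Split one `ip route` line into destination, keyword/value pairs and flags."""
    tokens = line.split()
    route = {"dst": tokens[0], "flags": []}
    i = 1
    while i < len(tokens):
        word = tokens[i]
        if word in VALUE_KEYWORDS and i + 1 < len(tokens):
            route[word] = tokens[i + 1]
            i += 2
        else:
            # Bare words such as "linkdown" or "onlink" carry no value.
            route["flags"].append(word)
            i += 1
    return route


def looks_connected(route):
    """Connected routes have no gateway and are added by the kernel."""
    return "via" not in route and route.get("proto") == "kernel"


for line in [
    "10.7.128.0/20 dev eth0 proto kernel scope link src 10.7.130.63",
    "default via 192.168.1.1 dev eth0 proto dhcp metric 100",
]:
    r = parse_route(line)
    print(r["dst"], r.get("proto"), looks_connected(r))
```

This prints "10.7.128.0/20 kernel True" and then "default dhcp False"; ip route shows the 0.0.0.0/0 route under the name default.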

IP forwarding

By default, in most systems, the kernel is configured so that the host acts as an end device (from the perspective of L3): it refuses to forward IP packets the way routers do. So if an incoming IP packet is not destined for the device (its destination IP address does not match any address of the host), the packet is discarded.

Note that if the target host has multiple IP addresses assigned, it is allowed (the IP specification permits this) to receive a packet (and respond to it) through a different L3 interface than the one it was destined for. For example: the packet has the destination address 192.168.2.1. The host is allowed to receive the packet on a different interface, e.g. one which has the IP address 192.168.1.1 assigned; this can even be a different L2 interface. The destination host is also allowed to respond through that (or even another) interface. This behavior is known as the weak host model, and it is the default on Linux.
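
The difference between accepting a packet only on the addressed interface and accepting it on any interface can be sketched in Python. The first behavior is called the strong host model, the second the weak host model; the function accepts, the interface names and the addresses are hypothetical, and the sketch ignores forwarding and the choice of the reply interface entirely.

```python
import ipaddress

# Hypothetical host with two interfaces; names and addresses are made up.
HOST_ADDRESSES = {
    "eth0": {ipaddress.ip_address("192.168.1.1")},
    "eth1": {ipaddress.ip_address("192.168.2.1")},
}


def accepts(dst, in_iface, addresses=HOST_ADDRESSES, strong=False):
    """Does a non-forwarding host accept a packet for dst that arrived on in_iface?"""
    dst = ipaddress.ip_address(dst)
    if strong:
        # Strong host model: only addresses of the receiving interface count.
        return dst in addresses.get(in_iface, set())
    # Weak host model: any local address counts, regardless of the interface.
    return any(dst in addrs for addrs in addresses.values())


print(accepts("192.168.2.1", "eth0"))               # True
print(accepts("192.168.2.1", "eth0", strong=True))  # False
print(accepts("10.0.0.5", "eth0"))                  # False (would be discarded)
```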

In order to make the system forward IP packets, IP forwarding has to be enabled in the system's configuration. On Linux, the kernel's network stack is responsible for that, so we naturally enable IP forwarding via the sysctl command. We can enable (or disable) IP forwarding per L2 interface (e.g. net.ipv4.conf.eth0.forwarding) and also globally for the whole network stack (net.ipv4.ip_forward or the special option net.ipv4.conf.all.forwarding).

To actually enable forwarding, it is enough to enable it per interface. The decision whether a packet should be forwarded or dropped is made when the packet is received, so forwarding has to be enabled on the receiving interface. But be careful: the response to the original packet usually travels the opposite way, i.e. it is received on the interface through which the original packet was sent. For example, to be able to receive a packet on eth0 and forward it to eth1, forwarding must be enabled on eth0. But to also be able to receive the response on eth1 and forward it to eth0, forwarding must be enabled on eth1 as well.

Note that the global settings (net.ipv4.ip_forward and net.ipv4.conf.all.forwarding) don't need to be enabled. But they behave in a special way: if either of them is changed, all other forwarding sysctl options (including all of the per-interface ones) are changed accordingly. If the device is meant to be a router, it is natural to enable forwarding for all interfaces using the global options.
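
The forwarding rules above can be summarized as a toy Python model. It is a sketch under simplifying assumptions, not the kernel's code: ForwardingConfig and its methods are hypothetical, a single set_global call stands for writing either of the two global options, and interfaces created later are not modelled.

```python
from dataclasses import dataclass, field


@dataclass
class ForwardingConfig:
    """Toy model of the IPv4 forwarding sysctls, not the kernel's implementation."""
    # Value of net.ipv4.conf.<name>.forwarding for each interface name.
    interfaces: dict = field(default_factory=dict)

    def set_interface(self, name, enabled):
        self.interfaces[name] = enabled

    def set_global(self, enabled):
        # Writing net.ipv4.ip_forward or net.ipv4.conf.all.forwarding
        # overwrites every per-interface value as well.
        for name in self.interfaces:
            self.interfaces[name] = enabled

    def can_forward(self, in_iface):
        # Checked on receive: only the receiving interface's flag matters.
        return self.interfaces.get(in_iface, False)


cfg = ForwardingConfig({"eth0": False, "eth1": False})
cfg.set_interface("eth0", True)
print(cfg.can_forward("eth0"))  # True: a packet from eth0 to eth1 is forwarded
print(cfg.can_forward("eth1"))  # False: the reply from eth1 to eth0 is dropped
cfg.set_global(True)
print(cfg.can_forward("eth1"))  # True
```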

We can enable forwarding globally (for all interfaces) using this command:

sysctl -w net.ipv4.ip_forward=1
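
sysctl is a front end to the files under /proc/sys: the key net.ipv4.ip_forward corresponds to the file /proc/sys/net/ipv4/ip_forward, so writing 1 into that file as root has the same effect as the command above. A minimal Python sketch of that mapping, with hypothetical helper names; it assumes no part of the key itself contains a dot (interface names like eth0.100 need special handling), and, like sysctl -w, a write only lasts until the next reboot.

```python
from pathlib import Path


def sysctl_path(key):
    """Map a key like net.ipv4.ip_forward to /proc/sys/net/ipv4/ip_forward."""
    # Assumes no component contains a dot itself (e.g. VLAN names like eth0.100).
    return Path("/proc/sys", *key.split("."))


def read_sysctl(key):
    """Current value, like `sysctl key`; reading usually needs no root."""
    return sysctl_path(key).read_text().strip()


def write_sysctl(key, value):
    """Like `sysctl -w key=value`: needs root and lasts only until reboot."""
    sysctl_path(key).write_text(f"{value}\n")


print(sysctl_path("net.ipv4.ip_forward"))  # /proc/sys/net/ipv4/ip_forward (on Linux)
if sysctl_path("net.ipv4.ip_forward").exists():  # only present on Linux
    print(read_sysctl("net.ipv4.ip_forward"))      # 0 (disabled) or 1 (enabled)
```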

VDE

- Stands for Virtual Distributed Ethernet and is capable of emulating Ethernet switches in software.
- Compared to the more Linux-native approach (bridging using the bridge kernel module), VDE can be run without root privileges, as it runs as a user application.
- The vde_switch command is used to emulate an Ethernet switch. Each running instance of the command represents one switch.
- VDE uses UNIX-domain sockets to establish a connection between the VDE switch and other applications (e.g. QEMU).

Running the VDE switch:

vde_switch -sock /tmp/my_switch.sock

Connecting a QEMU VM to the VDE switch:

qemu-system-x86_64 -nic vde,sock=/tmp/my_switch.sock

Note that by default, the VDE switch runs in the foreground. If you hit the Return key, you invoke the management console of the switch.
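
When several VMs share one switch, it can be handy to generate their command lines with a script. A minimal Python sketch, reusing the socket path from the example; the helper names are hypothetical. It also gives every VM its own MAC address via the mac= suboption of -nic, because separate QEMU processes otherwise all use the same default MAC address (52:54:00:12:34:56), which breaks communication between them once they share one Ethernet segment.

```python
import shlex


def vde_switch_cmd(sock):
    """Command that starts one emulated switch listening on the given socket path."""
    return ["vde_switch", "-sock", sock]


def qemu_cmd(sock, index, extra=()):
    """Command for one VM attached to the switch; index selects a distinct MAC address."""
    if not 0 <= index <= 0xFF:
        raise ValueError("index must fit into the last MAC address byte")
    # 52:54:00 is the prefix of QEMU's own default MACs; avoid index 0x56,
    # which would repeat the default 52:54:00:12:34:56.
    mac = f"52:54:00:12:34:{index:02x}"
    return ["qemu-system-x86_64", "-nic", f"vde,sock={sock},mac={mac}", *extra]


sock = "/tmp/my_switch.sock"
print(shlex.join(vde_switch_cmd(sock)))
for i in range(1, 3):
    print(shlex.join(qemu_cmd(sock, i)))
```

Each printed command would typically run in its own terminal, since the switch stays in the foreground.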